Correlation Between Independent Reading and Test Scores

Testing the Significance of Correlations

- Comparison of correlations from independent samples

- Comparison of correlations from dependent samples

- Testing linear independence (Testing against 0)

- Testing correlations against a fixed value

- Calculation of confidence intervals of correlations

- Fisher-Z-Transformation

- Calculation of the Phi correlation coefficient rPhi for binary data

- Calculation of the weighted mean of a list of correlations

- Transformation of the effect sizes r, d, f, Odds Ratio and eta square

- Calculation of Linear Correlations

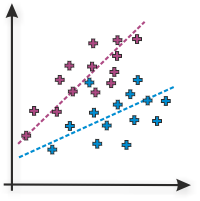

1. Comparison of correlations from independent samples

Correlations that have been obtained from different samples can be tested against each other. Example: Imagine you want to test whether men's income increases with age considerably faster than women's. You could, e.g., collect data on age and income from 1 200 men and 980 women. The correlation could amount to r = .38 in the male cohort and r = .31 in the female cohort. Is there a significant difference between the correlations of the two cohorts?

| | n | r |
|---|---|---|
| Correlation 1 | | |
| Correlation 2 | | |
| Test Statistic z | | |
| Probability p | | |

(Calculation according to Eid, Gollwitzer & Schmitt, 2011, p. 547; one-sided test)
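Comparisons of two independent correlations are usually carried out on Fisher-Z-transformed values. The sketch below is minimal and assumes the standard test statistic z = (Z(r1) - Z(r2)) / √(1/(n1 - 3) + 1/(n2 - 3)). It has not been checked line by line against Eid et al., and the function name `compare_independent` is hypothetical:

```python
import math
from statistics import NormalDist


def compare_independent(r1, n1, r2, n2):
    """Fisher-z test for the difference between two independent correlations.

    Assumed standard formula; returns (z, one-sided p) for H1: rho1 > rho2.
    """
    z1 = math.atanh(r1)  # Fisher-Z of the first correlation
    z2 = math.atanh(r2)  # Fisher-Z of the second correlation
    se = math.sqrt(1 / (n1 - 3) + 1 / (n2 - 3))  # standard error of z1 - z2
    z = (z1 - z2) / se
    p_one_sided = 1 - NormalDist().cdf(z)  # upper tail of the standard normal
    return z, p_one_sided


# Income example from the text: 1 200 men (r = .38) vs. 980 women (r = .31)
z, p = compare_independent(0.38, 1200, 0.31, 980)
print(f"z = {z:.3f}, one-sided p = {p:.4f}")
```

For the income example this gives z of about 1.84 and a one-sided p of about .03.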

2. Comparison of correlations from dependent samples

If several correlations have been obtained from the same sample, this dependence within the data can be used to increase the power of the significance test. Consider the following fictitious example:

- 85 children from grade 3 have been tested on intelligence (1), arithmetic abilities (2) and reading comprehension (3). The correlation between intelligence and arithmetic abilities amounts to r12 = .53, intelligence and reading correlate with r13 = .41, and arithmetic and reading with r23 = .59. Is the correlation between intelligence and arithmetic abilities higher than the correlation between intelligence and reading comprehension?

| | n | r12 | r13 | r23 |
|---|---|---|---|---|
| Test Statistic z | | | | |
| Probability p | | | | |

(Calculation according to Eid et al., 2011, p. 548 f.; one-sided test)
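Here the two compared correlations, r12 and r13, share variable 1 (intelligence). One widely used option for this situation is Steiger's (1980) z-test, which feeds r23 into the standard error. The sketch below assumes that test. The formula the calculator takes from Eid et al. may differ in detail, and `compare_dependent_overlapping` is a hypothetical name:

```python
import math
from statistics import NormalDist


def compare_dependent_overlapping(r12, r13, r23, n):
    """Assumed: Steiger's (1980) z-test for r12 vs. r13 (both share variable 1).

    Returns (z, one-sided p) for H1: rho12 > rho13.
    """
    z12, z13 = math.atanh(r12), math.atanh(r13)  # Fisher-Z values
    r_bar = (r12 + r13) / 2  # pooled estimate of the two compared correlations
    # Covariance term between the two correlations, using the pooled r_bar
    psi = r23 * (1 - 2 * r_bar**2) - 0.5 * r_bar**2 * (1 - 2 * r_bar**2 - r23**2)
    c = psi / (1 - r_bar**2) ** 2  # correlation between z12 and z13
    z = (z12 - z13) * math.sqrt(n - 3) / math.sqrt(2 - 2 * c)
    p_one_sided = 1 - NormalDist().cdf(z)
    return z, p_one_sided


# Grade-3 example from the text: n = 85, r12 = .53, r13 = .41, r23 = .59
z, p = compare_dependent_overlapping(0.53, 0.41, 0.59, 85)
print(f"z = {z:.3f}, one-sided p = {p:.4f}")
```

With the example values, z is about 1.4 and the one-sided p is about .08.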

3. Testing linear independence (Testing against 0)

With the following calculator, you can test whether correlations differ from zero. The test is based on Student's t distribution with n - 2 degrees of freedom. An example: The length of the left foot and the length of the nose of 18 men are measured. The two lengths correlate with r = .69. Is the correlation significantly different from 0?

| | n | r |
|---|---|---|
| Test Statistic t | | |
| Probability p (one-sided) | | |
| Probability p (two-sided) | | |

(Calculation according to Eid et al., 2011, p. 542; two-sided test)

4. Testing correlations against a fixed value

With the following calculator, you can test whether correlations differ from a fixed value. The test uses the Fisher-Z-Transformation.

| | n | r | ρ (value the correlation is tested against) |
|---|---|---|---|
| Test Statistic z | | | |
| Probability p | | | |

(Calculation according to Eid et al., 2011, p. 543 f.; two-sided test)

5. Calculation of confidence intervals of correlations

The confidence interval specifies the range of values that contains the population correlation with a given probability (the confidence coefficient). The higher the confidence coefficient, the wider the confidence interval. Values of .90 or .95 are commonly used.

| | n | r | Confidence Coefficient |
|---|---|---|---|
| Confidence interval | | | |

(Calculation according to Eid et al., 2011, p. 545 f.; two-sided)

The calculation becomes imprecise for large sample sizes and extreme correlation values due to the limited precision of floating-point numbers in JavaScript.
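A common way to build the interval is to transform r to Fisher-Z, add and subtract the normal critical value times 1/√(n - 3), and transform the bounds back. The sketch below assumes that approach and uses made-up inputs. Python floats are IEEE 754 doubles, like JavaScript numbers, so the same precision caveat applies. `correlation_ci` is a hypothetical name:

```python
import math
from statistics import NormalDist


def correlation_ci(r, n, confidence=0.95):
    """Assumed Fisher-Z confidence interval for a correlation; returns (lower, upper)."""
    z = math.atanh(r)
    se = 1 / math.sqrt(n - 3)  # approximate standard error of Fisher-Z
    q = NormalDist().inv_cdf(1 - (1 - confidence) / 2)  # two-sided critical value
    return math.tanh(z - q * se), math.tanh(z + q * se)  # back-transform the bounds


# Hypothetical values: n = 100, r = .50, confidence coefficient .95
lower, upper = correlation_ci(0.50, 100, 0.95)
print(f"[{lower:.3f}, {upper:.3f}]")
```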

6. Fisher-Z-Transformation

The Fisher-Z-Transformation converts correlations into an approximately normally distributed measure. It is necessary for many operations with correlations, e.g. when averaging a list of correlations. The following converter transforms correlations into Fisher-Z values and also computes the inverse operation. Please note that Fisher-Z is written with a capital Z.

| Value | Transformation | Result |
|---|---|---|
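The transformation is Z = ½·ln((1 + r) / (1 - r)), which equals the inverse hyperbolic tangent of r. Its inverse is r = tanh(Z). Here is a minimal sketch; the function names are hypothetical:

```python
import math


def fisher_z(r):
    """Fisher-Z of a correlation r (-1 < r < 1); identical to math.atanh(r)."""
    return 0.5 * math.log((1 + r) / (1 - r))


def inverse_fisher_z(z):
    """Back-transforms a Fisher-Z value into a correlation."""
    return math.tanh(z)


print(fisher_z(0.5), inverse_fisher_z(fisher_z(0.5)))
```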

7. Calculation of the Phi correlation coefficient rPhi for binary data

rPhi is a measure for binary data such as counts in different categories, e.g. pass/fail in an exam of males and females. It is also called the contingency coefficient or Yule's Phi. The transformation to dCohen is done via the effect size calculator.

| | Group 1 | Group 2 |
|---|---|---|
| Category 1 | | |
| Category 2 | | |
| rPhi | | |
| Effect Size dCohen | | |
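For a 2 × 2 table, rPhi is (ad - bc) / √((a + b)(c + d)(a + c)(b + d)). Here a and b are the Category 1 counts of Group 1 and Group 2, and c and d are the Category 2 counts. The sketch also applies the common conversion d = 2r / √(1 - r²); whether the effect size calculator uses exactly this conversion is an assumption. The counts and function names are hypothetical:

```python
import math


def phi_coefficient(a, b, c, d):
    """rPhi for a 2 x 2 table.

    a, b: Category 1 counts in Group 1 and Group 2
    c, d: Category 2 counts in Group 1 and Group 2
    """
    return (a * d - b * c) / math.sqrt((a + b) * (c + d) * (a + c) * (b + d))


def r_to_d(r):
    """Assumed common conversion from a correlation to Cohen's d."""
    return 2 * r / math.sqrt(1 - r * r)


# Hypothetical counts: Group 1 has 30 pass / 10 fail, Group 2 has 20 pass / 20 fail
phi = phi_coefficient(30, 20, 10, 20)
d = r_to_d(phi)
print(f"rPhi = {phi:.3f}, d = {d:.3f}")
```

Swapping the two groups flips the sign of rPhi, so the sign only reflects the order of the columns.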

8. Calculation of the weighted mean of a list of correlations

Due to the skewed distribution of correlations (see Fisher-Z-Transformation), the mean of a list of correlations cannot simply be calculated by taking the arithmetic mean. Usually, correlations are transformed into Fisher-Z values and weighted by the number of cases before averaging and back-transforming with the inverse Fisher-Z-Transformation. While this is the usual approach, Eid et al. (2011, p. 544) propose using the correction of Olkin & Pratt (1958) instead, as simulations showed that it estimates the mean correlation more precisely. The following calculator computes both for you, the "traditional Fisher-Z approach" and the algorithm of Olkin and Pratt.

Please fill in the correlations in column A and the number of cases in column B. You can also copy the values from tables in your spreadsheet program. Finally, click on "OK" to start the calculation. Some values are already filled in for demonstration purposes.
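The sketch below covers only the traditional Fisher-Z approach; the Olkin & Pratt correction is not reproduced here. The weights n - 3 (the inverse sampling variance of Fisher-Z) are an assumption, and weighting by n instead only changes one line. The names and data are hypothetical:

```python
import math


def mean_correlation_fisher(rs, ns):
    """Weighted mean correlation via Fisher-Z (assumed weights: n - 3)."""
    weights = [n - 3 for n in ns]  # use `n for n in ns` to weight by raw case numbers
    zs = [math.atanh(r) for r in rs]  # Fisher-Z of each correlation
    z_mean = sum(w * z for w, z in zip(weights, zs)) / sum(weights)
    return math.tanh(z_mean)  # back-transform the averaged Fisher-Z


# Hypothetical columns A (correlations) and B (number of cases)
mean_r = mean_correlation_fisher([0.30, 0.50, 0.40], [50, 100, 80])
print(f"mean r = {mean_r:.3f}")
```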

9. Transformation of the effect sizes r, d, f, Odds Ratio and eta square

Correlations are an effect size measure: they quantify the magnitude of an empirical effect. There are a number of other effect size measures as well, with dCohen probably being the most prominent one. The different effect size measures can be converted into one another. Please have a look at the online calculators on the page Computation of Effect Sizes.
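For orientation, here are widely used conversion formulas between these measures, assuming two groups of equal size: d = 2r / √(1 - r²), f = d / 2, η² = f² / (1 + f²) (which then equals r²), and ln(OR) = d·π / √3. This is a sketch; the formulas on the Computation of Effect Sizes page may differ, and the function names are hypothetical:

```python
import math


def r_to_d(r):
    """Correlation -> Cohen's d (assumes two equal-sized groups)."""
    return 2 * r / math.sqrt(1 - r * r)


def d_to_r(d):
    """Cohen's d -> correlation (inverse of r_to_d)."""
    return d / math.sqrt(d * d + 4)


def d_to_f(d):
    """Cohen's d -> Cohen's f for two groups."""
    return d / 2


def f_to_eta_squared(f):
    """Cohen's f -> eta square."""
    return f * f / (1 + f * f)


def d_to_odds_ratio(d):
    """Cohen's d -> odds ratio via ln(OR) = d * pi / sqrt(3)."""
    return math.exp(d * math.pi / math.sqrt(3))


d = r_to_d(0.30)
print(d, d_to_f(d), f_to_eta_squared(d_to_f(d)), d_to_odds_ratio(d))
```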

10. Calculation of Linear Correlations

The online calculator computes linear Pearson (product-moment) correlations of two variables. Please fill in the values of variable 1 in column A and the values of variable 2 in column B and press 'OK'. As a demonstration, values yielding a high positive correlation are already filled in by default.

| Data | Linear correlation rPearson | Coefficient of determination r² | Interpretation |
|---|---|---|---|
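The Pearson coefficient is the covariance of the two variables divided by the product of their standard deviations. Squaring it gives the coefficient of determination. Below is a self-contained sketch with made-up data. `pearson_r` is a hypothetical name; Python 3.10+ also offers `statistics.correlation`:

```python
import math


def pearson_r(x, y):
    """Linear (product-moment) correlation of two equally long lists."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))  # co-deviation sum
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


# Hypothetical data: column A (variable 1) and column B (variable 2)
x = [1, 2, 3, 4, 5]
y = [2, 4, 5, 4, 5]
r = pearson_r(x, y)
r_squared = r ** 2  # coefficient of determination
print(f"r = {r:.4f}, r^2 = {r_squared:.2f}")
```

For these five pairs, r = .7746 and r² = .60.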

Literature

Many hypothesis tests on this page are based on Eid et al. (2011). jStat is used to generate the Student's t-distribution for testing correlations against each other. The spreadsheet element is based on Handsontable.

- Eid, M., Gollwitzer, M., & Schmitt, M. (2011). Statistik und Forschungsmethoden: Lehrbuch. Weinheim: Beltz.

Please use the following citation: Lenhard, W. & Lenhard, A. (2014). Hypothesis Tests for Comparing Correlations. Available: https://www.psychometrica.de/correlation.html. Bibergau (Germany): Psychometrica. DOI: 10.13140/RG.2.1.2954.1367

Source: https://www.psychometrica.de/correlation.html